Popular large language models (LLMs) like OpenAI’s ChatGPT and Google’s Bard are energy-intensive, requiring massive server farms to supply enough computing power to train the powerful programs. Cooling those same data centers also makes the AI chatbots incredibly thirsty. New research suggests training for GPT-3 alone consumed 185,000 gallons (700,000 liters) of water. An average user’s conversational exchange with ChatGPT basically amounts to dumping a large bottle of fresh water out on the ground, according to a new study. Given the chatbot’s unprecedented popularity, researchers fear all those spilled bottles could take a troubling toll on water supplies, especially amid historic droughts and looming environmental uncertainty in the US.

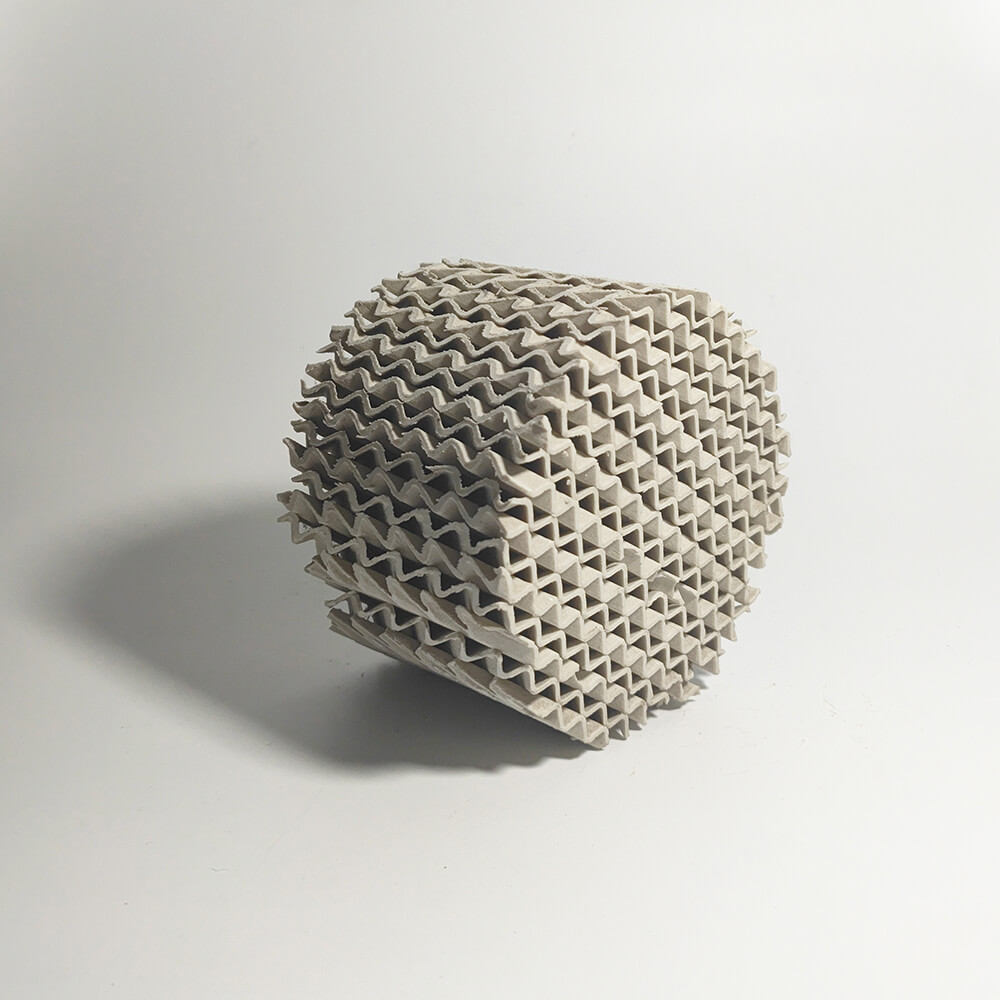

Researchers from the University of California Riverside and the University of Texas Arlington published the AI water consumption estimates in a pre-print paper titled “Making AI Less ‘Thirsty.’” The authors found the amount of clean freshwater required to train GPT-3 is equivalent to the amount needed to fill a nuclear reactor’s cooling tower. OpenAI has not disclosed the length of time required to train GPT-3, complicating the researchers’ estimations. But Microsoft, which has struck a multi-year, multi-billion-dollar partnership with the AI startup and built supercomputers for AI training, says its latest supercomputer contains 10,000 graphics cards and over 285,000 processor cores. A machine of that size would require an extensive cooling apparatus, and the figures give a glimpse into the vast scale of the operation behind artificial intelligence. The water used to train GPT-3 could also produce battery cells for 320 Teslas. Put another way, ChatGPT, which came after GPT-3, would need to “drink” a 500-milliliter water bottle in order to complete a basic exchange with a user consisting of roughly 25 to 50 questions.
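To put the bottle comparison in per-question terms, the arithmetic can be sketched in a few lines of Python. This is only a rough illustration built from the figures quoted above (a 500-milliliter bottle per 25 to 50 questions, and 185,000 gallons for training); the function and constant names are made up for this example and do not come from the paper.

```python
# Rough arithmetic on the figures quoted in the article.
# All names here are illustrative, not taken from the researchers' paper.

LITERS_PER_US_GALLON = 3.785411784  # standard US liquid gallon
BOTTLE_ML = 500                     # one "bottle" per basic exchange


def water_per_question_ml(questions_per_bottle: int, bottle_ml: float = BOTTLE_ML) -> float:
    """Milliliters of water attributed to a single question."""
    if questions_per_bottle <= 0:
        raise ValueError("questions_per_bottle must be positive")
    return bottle_ml / questions_per_bottle


def gallons_to_liters(gallons: float) -> float:
    """Convert US gallons to liters."""
    return gallons * LITERS_PER_US_GALLON


# The article's range: one bottle covers roughly 25 to 50 questions.
per_question_high = water_per_question_ml(25)  # fewer questions per bottle
per_question_low = water_per_question_ml(50)   # more questions per bottle

# Cross-check of the training estimate: 185,000 gallons in liters.
training_liters = gallons_to_liters(185_000)

print(f"Per question: {per_question_low:.0f} to {per_question_high:.0f} ml")
print(f"GPT-3 training estimate: about {training_liters:,.0f} liters")
```

By that math, each question works out to somewhere between 10 and 20 milliliters, and the gallon figure lines up with the roughly 700,000 liters the researchers cite.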

The gargantuan number of gallons needed to train the AI model also assumes the training is happening in Microsoft’s state-of-the-art US data center, built especially for OpenAI to the tune of tens of millions of dollars. If the model was trained in the company’s less energy-efficient Asia data center, the report notes water consumption could be three times higher. The researchers expect these water requirements will only increase further with newer models, like the recently released GPT-4, which rely on a larger set of data parameters than their predecessors.

“AI models’ water footprint can no longer stay under the radar,” the researchers said. “Water footprint must be addressed as a priority as part of the collective efforts to combat global water challenges.”

When calculating AI’s water consumption, the researchers draw a distinction between water “withdrawal” and “consumption.” Withdrawal is the practice of physically removing water from a river, lake, or other source, while consumption refers specifically to the loss of water by evaporation when it’s used in data centers. The research on AI’s water usage focuses primarily on the consumption part of that equation, where the water can’t be recycled.

Anyone who’s spent a few seconds in a company server room knows you need to pack a sweater first. Server rooms are kept cool, typically between 50 and 80 degrees Fahrenheit, to prevent equipment from malfunctioning. Maintaining that ideal temperature is a constant challenge because the servers themselves convert their electrical energy into heat. Cooling towers like the ones shown below are often deployed to counteract that heat and keep the rooms at their ideal temperature by evaporating cold water.

Cooling towers get the job done, but they require immense amounts of water to do so. The researchers estimate around a gallon of water is consumed for every kilowatt-hour expended in an average data center. Not just any type of water can be used, either. Data centers pull from clean, freshwater sources in order to avoid the corrosion or bacteria growth that can come with seawater. Freshwater is also essential for humidity control in the rooms. The researchers likewise hold data centers accountable for the water needed to generate the high amounts of electricity they consume, something the scientists called “off-site indirect water consumption.”
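The gallon-per-kilowatt-hour rule of thumb lends itself to a quick back-of-the-envelope estimate. The Python sketch below assumes the researchers’ rough figure of one gallon per kilowatt-hour for on-site cooling and leaves out the off-site water used to generate electricity. The function name and the 1,000 kilowatt-hour workload are hypothetical, chosen only to show the calculation.

```python
# Back-of-the-envelope estimate of on-site cooling water, assuming the
# article's rough figure of about one gallon consumed per kilowatt-hour.
# Off-site water used for electricity generation is not included.

GALLONS_PER_KWH = 1.0               # approximate average cited in the article
LITERS_PER_US_GALLON = 3.785411784  # standard US liquid gallon


def on_site_water_gallons(energy_kwh: float, gallons_per_kwh: float = GALLONS_PER_KWH) -> float:
    """Estimate gallons of water evaporated to cool a given energy load."""
    if energy_kwh < 0:
        raise ValueError("energy_kwh must not be negative")
    return energy_kwh * gallons_per_kwh


# Hypothetical workload for illustration only, not a figure from the study.
example_energy_kwh = 1_000
gallons = on_site_water_gallons(example_energy_kwh)
liters = gallons * LITERS_PER_US_GALLON

print(f"{example_energy_kwh:,} kWh -> about {gallons:,.0f} gallons ({liters:,.0f} liters)")
```

A data center in a hotter climate or with older cooling equipment would use a higher gallons-per-kilowatt-hour value, which is why the second argument is left adjustable.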

Water consumption issues aren’t limited to OpenAI or AI models. In 2019, Google requested more than 2.3 billion gallons of water for data centers in just three states. The company currently has 14 data centers spread out across North America, which it uses to power Google Search, its suite of workplace products, and more recently, its LaMDA and Bard large language models. LaMDA alone, according to the recent research paper, could require millions of liters of water to train, more than GPT-3, because several of Google’s thirsty data centers are housed in hot states like Texas. The researchers issued a caveat with this estimation, though, calling it an “approximate reference point.”

Aside from water, new LLMs similarly require a staggering amount of electricity. A Stanford AI report released last week looks at differences in energy consumption among four prominent AI models, estimating OpenAI’s GPT-3 released 502 metric tons of carbon during its training. Overall, the energy needed to train GPT-3 could power an average American’s home for hundreds of years.

“The race for data centers to keep up with it all is pretty frantic,” Critical Facilities Efficiency Solution CEO Kevin Kent said in an interview with Time. “They can’t always make the most environmentally best choices.”

Already, the World Economic Forum estimates some 2.2 million US residents lack water and basic indoor plumbing. Another 44 million live with “inadequate” water systems. Researchers fear a combination of climate change and increased US populations will make those figures even worse by the end of the century. By 2071, Stanford estimates nearly half of the country’s 204 freshwater basins will be unable to meet monthly water demands. Many regions could reportedly see their water supplies cut by a third in the next 50 years.

Rising temperatures partially fueled by human activity have resulted in the American West recording its worst drought in 1,000 years, which also threatens freshwater, though recent flooding rains have helped stave off some dire concerns. Water levels at reservoirs like Lake Mead have receded so far that they’ve exposed decades-old human remains. All of that means AI’s hefty water demands will likely become a growing point of contention, especially if the tech is embedded into ever more sectors and services. Data requirements for LLMs are only getting larger, which means companies will have to find ways to increase their data centers’ water efficiency.

Researchers say there are some relatively clear ways to bring AI’s water price tag down. For starters, where and when AI models are trained matters. Outside temperatures, for example, can affect the amount of water required to cool data centers. AI companies could hypothetically train models at midnight when it’s cooler or in a data center with better water efficiency to cut down on usage. Chatbot users, on the other hand, could opt to engage with the models during “water-efficient hours,” much as municipal authorities encourage off-hours dishwasher use. Still, any of those demand-side changes will require greater transparency on the part of tech companies building these models, something the researchers say is in worryingly short supply.

“We recommend AI model developers and data center operators be more transparent,” the researchers wrote. “When and where are the AI models trained? What about the AI models trained and/or deployed in third-party colocation data centers or public clouds? Such information will be of great value to the research community and the general public.”
